Don’t Assume AI is Killing News Sites

Author: Vanessa Otero

Date: 09/25/2025

The reports of news sites’ deaths are greatly exaggerated. The freakout is understandable: the reputable Wall Street Journal itself recently ran the alarming headline “News Sites Are Getting Crushed by Google’s New AI Tools.” The first line: “The AI armageddon is here for online news publishers.” (Related: reliable outlets aren’t immune from occasionally publishing sensationalistic content with weak analysis.)

Combine that with other alarming-sounding stats, like Cloudflare’s reports that AI companies have large “crawl-to-referral” ratios: Anthropic crawled 70,900 pages for every one (1) referral, OpenAI 1,600 to 1, Perplexity 202,400 to 1, and even Google 9.4 to 1. Cloudflare’s highly technical blog post highlights real issues, such as the resource burden of crawling and the fairness of the exchange between AI companies and website publishers. But it’s easy for a layperson to misread these ratios as meaning something like “for every 1,600 chats in ChatGPT that could have sent someone to a news site, only one click goes to that site,” which is not what the crawl-to-referral ratio means. The ratio compares how many pages a company’s bots crawl with how many visitors that company’s products send back; it doesn’t count chats or questions at all.
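To make that distinction concrete, here is a minimal Python sketch. It assumes the ratio is simply crawl requests divided by referral visits over the same period, which is a simplification of whatever exact methodology Cloudflare uses; the function name and all the numbers are hypothetical. Notice that nothing about user chats appears anywhere in the formula.

```python
def crawl_to_referral_ratio(crawl_requests: int, referrals: int) -> float:
    """Pages a company's crawlers fetched per visitor its products referred back.

    Simplified, hypothetical definition for illustration only.
    """
    if referrals == 0:
        raise ValueError("ratio is undefined when there are no referrals")
    return crawl_requests / referrals


# Hypothetical site: an AI company's bots fetch 160,000 pages in a month,
# and users of that company's chatbot click through to the site 100 times.
print(crawl_to_referral_ratio(160_000, 100))  # 1600.0

# If the bots simply crawl twice as often, the ratio doubles, even though
# no user behavior (chats, clicks) has changed at all.
print(crawl_to_referral_ratio(320_000, 100))  # 3200.0
```

A high ratio can therefore come from aggressive crawling just as easily as from a lack of clicks, which is why it can't be read as "chats per click."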

Other writers have piled on, citing overall declines in web traffic to certain publishers as proof that news sites, and perhaps the whole internet itself, are dying! And it follows that the ad companies that rely on that internet might be dying, too!

AdWeek’s headline reads “As AI Search Threatens Open-Web Ad Supply, DSPs Face a Reckoning.” It starts:

“As AI tools like ChatGPT and Google’s AI Overviews keep users on their platforms—and away from publishers—it’s cutting into the open-web ad inventory that demand-side platforms such as The Trade Desk and Viant rely on.”

The data used to support this assertion is a decline in overall traffic to certain news sites:

“In July, CNN’s website traffic fell about 30% from a year earlier, while Business Insider and HuffPost saw drops closer to 40%, according to Similarweb.”

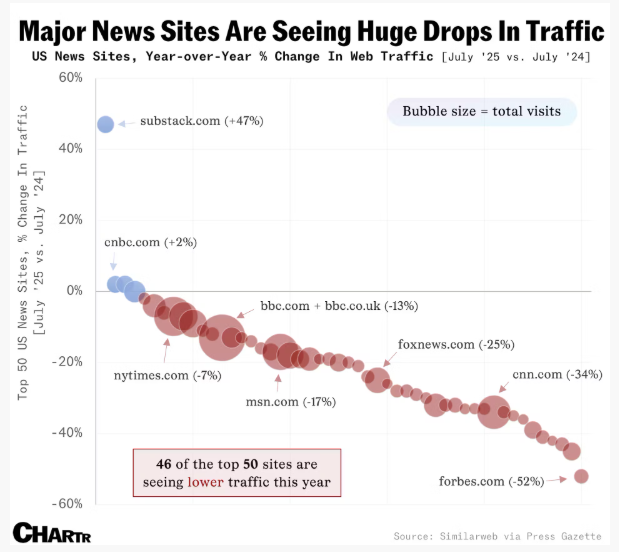

Another blog circulating on LinkedIn is about a couple of publishers suing Google for using their content for the Google AI summaries (which is one issue about improper use/copyright/unfair compensation). But it focuses more on the related issue of traffic drop harm. And it makes the same argument: overall traffic to these news sites is down, so that means Google’s AI is causing the drop. The proof? This graphic.

One more: an article in Digiday reports data from the publisher trade group Digital Content Next (DCN). The headline: “Google AI Overviews linked to 25% drop in publisher referral traffic, new data shows.” The article itself says DCN’s data shows drops between 1% and 25%, with a median of 10%, so, not the greatest headline. The article links to, but quickly dismisses, a Google blog post rebutting everyone’s panicked conclusions about traffic declines. I’m not the president of Google’s fan club, but the rest of this article will support a lot of the points in Google’s explanation.

At this point, folks who have read a few of these headlines might simply take it as established fact that AI is currently damaging, and will ultimately kill, all news sites. But if you tease apart the multiple concepts these articles mash together, that’s not a foregone conclusion at all. Let’s make some distinctions.

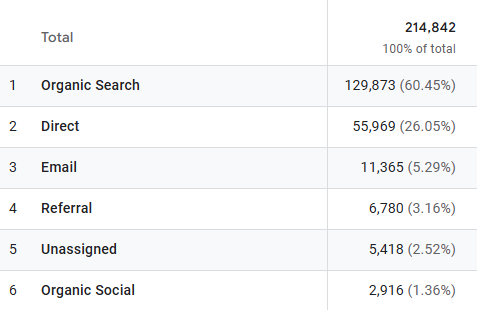

First, it’s important to distinguish when articles are talking about either 1) “organic search traffic” and 2) “overall traffic.” Since Google has historically dominated search, any reference to “organic search traffic” pretty much means a link clicked after a Google search. There’s other types of traffic, like “direct traffic,” which means someone typed your URL in the browser. There’s “referral” traffic, which means someone clicked on your link from someone else’s site (maybe from a site that cited yours, or from ChatGPT). Every site has a mix of what percentage comes from where. For example, on adfontesmedia.com, about 60% of our traffic in the last month is from organic search (Google) and 26% is direct (people typing in the URL):

Maybe I should be scared that so much of our traffic comes from Google search, but let’s reserve judgment on that: the share is 60% this year, up from 53% in the same month last year (i.e., AI hasn’t lowered the share of our traffic that comes from organic search (Google) since last year). And overall traffic is up 50%, so more people are visiting our site, and not just through Google searches.

Other sites have different mixes of organic search, direct, referral, and other traffic. Some rely heavily on organic search (Google), and others less so. If most of your traffic comes from organic search and organic search drops a ton, your overall traffic will drop a ton. But if you get a lot more direct or referral traffic than organic search traffic, a drop in organic search might lower your overall traffic very little, if at all.
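The arithmetic behind this is a weighted average: each traffic source’s change counts in proportion to that source’s share of total traffic. Here is a minimal Python sketch with two hypothetical sites and a hypothetical 30% decline in organic search, with every other source held flat; none of these figures come from a real site.

```python
def overall_traffic_change(mix: dict[str, float], changes: dict[str, float]) -> float:
    """Overall fractional change in traffic, weighted by each source's share.

    mix: source -> share of last year's total traffic (shares sum to 1.0)
    changes: source -> fractional change for that source (-0.30 means down 30%)
    Sources missing from `changes` are treated as flat (0% change).
    """
    return sum(share * changes.get(source, 0.0) for source, share in mix.items())


# Two hypothetical sites; organic search falls 30% for both.
search_heavy = {"organic": 0.80, "direct": 0.15, "referral": 0.05}
direct_heavy = {"organic": 0.30, "direct": 0.55, "referral": 0.15}
drop = {"organic": -0.30}

print(f"{overall_traffic_change(search_heavy, drop):.0%}")  # -24%
print(f"{overall_traffic_change(direct_heavy, drop):.0%}")  # -9%
```

The same organic-search decline costs the search-heavy site almost a quarter of its traffic, while the site with a large direct audience loses under a tenth.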

Second, let’s distinguish between declines in website traffic blamed on 1) Google’s AI summaries and 2) generative AI chatbots generally. Some of the articles blame Google’s AI overviews, citing a Pew study showing that when you Google something and Google shows you an AI overview (which it did only 18% of the time in the study), users were about half as likely to click on a link (8% clicked a link when an overview appeared, vs. 15% when one didn’t).
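As a rough, back-of-the-envelope sketch, here is what those Pew figures would imply for clicks across all searches. It naively assumes that searches with and without an AI overview are otherwise comparable, an assumption the raw percentages alone don’t establish, and it holds the overview share fixed at the study’s 18%.

```python
def blended_click_rate(overview_share: float, ctr_with: float, ctr_without: float) -> float:
    """Share of all searches ending in a link click, mixing searches
    that show an AI overview with searches that don't (naive model)."""
    return overview_share * ctr_with + (1 - overview_share) * ctr_without


baseline = 0.15                                  # hypothetical world with no AI overviews
blended = blended_click_rate(0.18, 0.08, 0.15)   # overviews on 18% of searches
print(f"{blended:.2%}")                          # 13.74%
print(f"{(baseline - blended) / baseline:.1%}")  # 8.4%
```

Under these assumptions, overall clicks fall by roughly 8%, not by half; the gap would widen as Google shows overviews on a larger share of searches.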

But declines in overall website traffic could just as well be attributable to AI chatbots generally. It makes intuitive sense to users of ChatGPT and Perplexity (and Google’s AI overviews) that you often go to them to ask a question instead of running a Google search, and that you often don’t need to click on any links once you get your initial answer. Declining website visits (both organic search and overall) could be the combined effect of all of these AI tools, not just Google’s own AI summaries in its search results.

Third, let’s distinguish between the terms “publishers” and “news sites.” “Publishers” can refer to producers of any internet content, from high-quality news sites to made-for-advertising sites and other low-quality internet sites. “Publishers” essentially means the whole internet; “news sites” are a specific subset. DCN’s data cited in the Digiday article is one of the few studies that makes this distinction. And notably, news sites were down only 7%, while non-news sites were down 14%; news sites declined about half as much.

Back to the causes of falling traffic and the evidence therefor:

If organic (Google) search traffic declines to news sites are Google AI’s fault, why isn’t organic search traffic to ALL news sites down equally?

And if the overall traffic declines to news sites are all AI’s fault, why isn’t traffic to ALL news sites down equally?

And why would traffic be down twice as much (14% vs 7%) for DCN’s non-news publishers compared to news publishers?

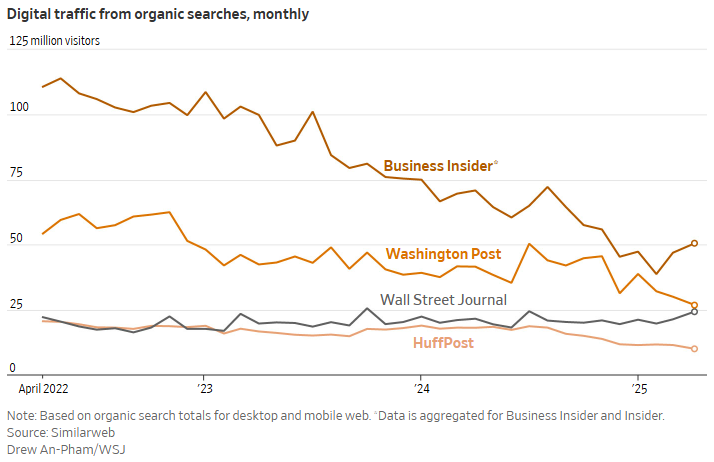

Regarding organic search (Google) declines, in the WSJ graphic, this image:

shows a huge drop to Business Insider’s organic search traffic (the basis of the story). And a noticeable decline to WaPo’s. A little to HuffPost’s. But WSJ’s is actually UP! Literally one of the four examples – the publication stating that AI armageddon is here – has experienced no drop in traffic from organic searches. Consider that the drops in organic searches have a lot to do with many other factors at a publication, which I’ll explore in a minute.

Regarding overall traffic, the top-50 graphic shown earlier shows a bunch of news sites with lower traffic in July 2025 than in July 2024, but what about the sites that are up, like CNBC (apparently three of the top 50, or 6%)? Others, like NYT, are down, but not by much. Leaders I’ve spoken to at WSJ, Newsweek, and Time, among others, have indicated their overall traffic is up. But yeah, looks like it really sucks for Forbes, CNN, and Fox News!

Just pointing to “AI exists” and “most news site traffic is down from last year” is a big fat correlation-equals-causation sandwich. These are stats, not a study. They don’t account for other factors, like the fact that news site traffic is almost always down in the year after a presidential election year, that Americans are experiencing record news fatigue, and that the media landscape is fragmented and has changed a lot, not just in the past year but over several years, with many new readers turning to individual news creators instead. I mean, look at the huge increase in Substack’s traffic, right in that same graph! Maybe the answer is there! Maybe all the traffic that used to go to the red-dotted news sources now goes to Substack. Because you know what there is a lot of over on Substack? News.

But these articles are right that Google referral traffic certainly IS falling for many websites, and it falls most when Google includes an AI overview, which it does on a growing portion of searches.

Several reports cite Google’s statement in a recent court filing that the open web is in “rapid decline” as proof that news sites specifically are screwed. Is the open web in “rapid decline” because of AI? Maybe the really large shitty parts of the internet are (parts Google largely created through its incentives), but not necessarily the good parts.

There are likely several reasons why AI-era declines (or growth) in both organic search traffic and overall traffic vary so much from site to site, and they have a lot to do with each site’s content and how it is set up.

And there is another way to mentally segment the different types of internet content that could explain why, in the age of AI, traffic for some sites is down but not for others.

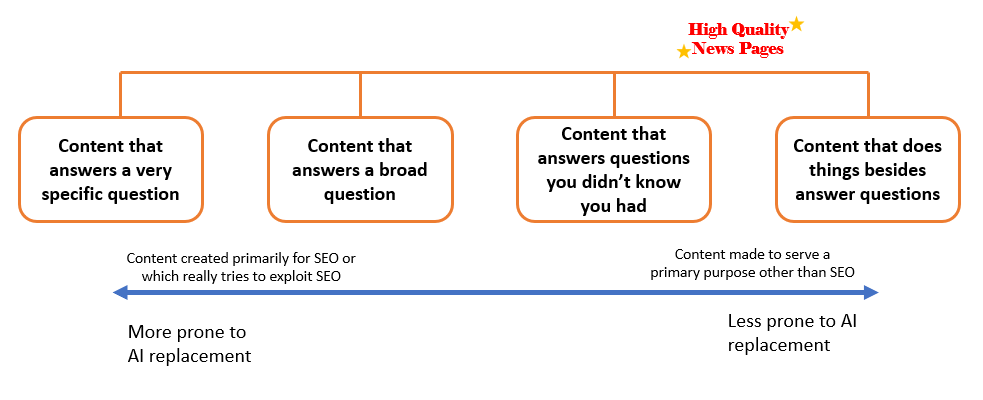

Remember, AI chatbots are primarily question-answering machines. Types of content out there can be classified on a continuum as follows:

- Content that answers a very specific question

- Content that answers a broad question

- Content that answers questions you didn’t know to ask

- Content that does things or gives you something besides answering questions

You can intuit that content that answers 1) very specific questions, or even some 2) broad questions, is the most likely to be replaced by a question-answering chatbot interface. But a high-quality news site answers a very broad question, namely “what is going on today that I should know?”, along with 3) questions you didn’t know you had, like what surprising or unexpected things just happened, or what was uncovered about the past (an investigative report). News sites are also 4) content that does things besides answering questions, like providing a nicely organized, curated, visual layout that lets you select what information you would like to consume from a broad menu of possibilities.

Sure, you could ask an AI chatbot “what is the news today?” and get some of that information, but a chat window is not a very good interface compared to the layout of a good news site (or app). And a news site or app is usually curated around a type of news. So your broad, unstated question when you visit WSJ is essentially “What’s going on in the financial markets, US politics, and international conflicts?” and your broad, unstated question when you visit your local news app is essentially “What is going on in my area, including the weather, traffic, and sports?”

The “unstated question” is key. Though many people do use AI for “seeking information,” news-site doomers underestimate how much we like to get information without having to ask for it.

And just because AI can answer a basic question about something doesn’t mean users prefer that format for the information. You can ask ChatGPT how reliable or biased a news source is, and it will give you a text answer based in part on data it scraped from Ad Fontes Media’s site. It will even try to draw it for you on a chart! (Poser.) But you still can’t download the Media Bias Chart image or search our Interactive one; you still have to come to our site for those experiences. That’s why our organic search and direct traffic remain resilient in the age of AI search. High-quality news sites with good user experiences and real relationships with their audiences are the same way.

Some news sites’ traffic will fall a lot more than others’, though, and the same goes for non-news publishers. The main factor separating the sites that AI will almost totally replace from the ones that will take a small traffic hit but remain strong is how much they currently depend on answering specific questions and on heavy search engine optimization (SEO).

Since the beginning of the internet there have been many good reasons to create a website: to promote your business, to provide people information, to report news, to entertain people, etc. But Google’s existence has incentivized the creation of so many “content sites” that exist just to make money from the site traffic itself, which is why there is a huge “long tail” of the internet. A lot of that content (but not all) answers a specific question or responds to a certain topic search. Search engine optimization became its own money-making enterprise and marketing specialty over the last 20 years because of Google’s power to send traffic to sites. Some site creators would even start by finding highly searched topics and then create pages to answer them. Part of the SEO game for ad-supported “content sites” (as opposed to, say, a business’s website that doesn’t run ads) is to publish new content frequently. A news site has legit new things to publish every day, but a non-news “content site” has to fish for new things to publish.

Because this had become a proven way to increase traffic, many sites that were created for other purposes (like news sites) have tried to capitalize on it by adding superfluous, SEO-optimized pages (e.g., ranking lists, evergreen stories, or entire pages that answer “is SNL new this week?” or “what’s the best way to get rid of this stain?”) to increase monetization. There’s a whole art to backlinking and cross-posting that is all about squeezing out extra Google traffic. Some decent news sites have leaned heavily into low-quality content creation for traffic growth. All of this makes up the shitty part of the internet that Google has created, as I mentioned above.

Some news sites did this a lot. Remember when Forbes ran the secret made-for-advertising (MFA) domain? It wouldn’t be surprising if some of its other pages are still lower-quality SEO magnets, and perhaps that is one reason it has lost more traffic (52%) than any other news site in the graphic above. Lots of folks would be surprised by how many extra junk webpages certain news sites publish to game Google SEO. It’s hard to blame news publishers for doing this, given the many ways they have been screwed by Google, other ad tech companies, social media companies, and the rest of the advertising industry generally. If you were a revenue leader at a news publication and needed to increase traffic 5, 10, or 25%, SEO-based content creation represented one of the few tools you could leverage.

I’m not saying that all news sites that are losing massive organic search or overall traffic are losing it because they create these junk pages. Business Insider, CNN, and HuffPost may be affected by other factors like their paywalls (BI’s is thick; CNN’s is new) or politics (CNN and HuffPost could also be victims of Democrats’ news fatigue). Some sites may be losing audience to creators.

But Google’s AI seems most likely to displace 1) highly SEO’d content and/or 2) content that answers specific questions. Commoditized news stories (i.e., short stories that just re-report other outlets’ news, news you can get anywhere) belong to that second type, so to the extent news outlets publish a lot of them, they are likely to lose traffic. Google incentivized outlets to create this content and is now displacing it with AI, but that is nothing new. Google is the ultimate ouroboros.

Unless you spend a lot of time examining the giant universe of websites that make up the internet, it’s easy to forget that this universe isn’t made up solely of content sites that answer the kinds of queries people now put to AI. Other types of sites show up high on Google even without investing in a bunch of SEO, like when you type in the name of a business you want to visit or shop at (which usually pulls up that exact business first, then some competitors), or when you are looking for a specific thing that only a few relevant sites have. For example, if you are looking for the Media Bias Chart but don’t know our company’s name, and you search “media bias chart” on Google, the results all point to our sites and social media pages, because our site is the only place you can find it (aside from a few knockoffs). Don’t forget, a lot of people just type the name of a site into the browser instead of its URL, so typing “wsj” and pressing Enter is technically an organic search that returns wsj.com as the first result, whereas typing “wsj.com” would count as direct traffic.

There are lots of sites on the internet that people go to because they can’t get the experience of that site elsewhere, and certainly not through an AI chatbot or summary. Sports sites and game sites are other examples. So are porn and gambling sites. Not all of these are the best places to advertise.

These kinds of sites are created for a purpose other than just driving traffic for advertising. That is, they are not just “content sites.” This is the good part of the internet (by “good,” I mean useful and purposeful, without judgment on the morality of what they are used for). Real journalism on the front pages of high-quality news sites is a big chunk of this good part. Yes, it is technically “content,” but it is more than that. It is a window into understanding what’s important in the world around you in real time. It serves a purpose.

Because of the SEO incentives of the last 20 years, many news sites are a mix of good internet and shitty internet. But they are certainly not all equal in their mix. As the DCN study shows, news sites overall seem to be inherently less prone to AI traffic drops than non-news content sites, and I think that’s because they have news. Comparing the specific news titles to their relative traffic drops suggests, to me at least, that the ones that have the most high-quality, original, non-commodity journalism are the least prone to traffic drops (organic search and overall).

So what does the proliferation of AI mean for news sites, the open internet, and advertisers?

News site traffic may drop a little, but there will be a floor to that drop. I don’t think the doomsday “Google Zero” concept (the idea that Google referral traffic will eventually go to zero) applies to news sites. News publishers that want to insulate themselves from traffic drops should double down on the content they are uniquely positioned to create: high-effort, fact-reporting journalism. Anyone (including AI) can create “content,” and a lot of people can even create news-related opinion content. But journalists can create journalism, and that’s special.

AI search may significantly decrease the sheer number of long-tail, SEO-gamed, single-question-answering, shitty internet pages, because it reduces traffic to those pages and makes them not worthwhile to publish. But maybe not, because current and new publishers are already using AI for content creation, publishing millions more AI garbage pages at very little cost. Whether the number of pages on the internet increases or decreases, the real human audience visiting them will decrease, likely resulting in fewer visits per page regardless.

Overall, news sites will likely improve in quality and keep steady or growing audiences while Google eats its long tail. AI companies, Google and the others, will grab the new advertising inventory that AI creates on their own interfaces. The good internet will command a bigger percentage of the finite internet audience than the shitty internet.

People have a fundamental need for true news. The technological mediums and formats have always changed and always will (print, web, apps, linear, cable, podcast, CTV, YouTube, social media). The fragmentation and consolidation of news producers means the information sometimes comes from one outlet of 1,000 people and sometimes from 100,000 outlets of one person. But the audience for news has always been relatively stable, and so has the universe of people willing and able to produce it.

In this era of AI changing the internet, that means news sites will become one of the best and most durable places on the good internet, making up a bigger portion of it, with even more valuable ad inventory, than they do today. News outlets and advertisers should partner accordingly.

Vanessa Otero is a former patent attorney in the Denver, Colorado, area with a B.A. in English from UCLA and a J.D. from the University of Denver. She is the original creator of the Media Bias Chart (October 2016), and founded Ad Fontes Media in February 2018 to fulfill the need revealed by the popularity of the chart: the need for a map to help people navigate the complex media landscape, and for comprehensive content analysis of media sources themselves. Vanessa regularly speaks on the topic of media bias and polarization to a variety of audiences.