Left and Right Agree – We’re Definitely Biased to the Other Side

Author:

Vanessa Otero

Date:

10/04/2023

It might not surprise you to learn that not everyone is a fan of Ad Fontes Media! In the past couple of weeks we’ve gotten some less-than-flattering coverage from outlets that sit fairly far out on the bias axis to the left and the right. You may have seen our response to a segment by The Young Turks (TYT, which is in our Hyper Partisan Left, Opinion or Wide Variation in Reliability categories).

Most recently, Media Research Center (MRC), which is affiliated with CNS News and NewsBusters, published an extensive report asserting that we are a “Media Literacy Firm Rigged to Favor Left-Wing Media.” We disagree, and although we probably won’t convince them, we would like to address specific claims outlined in their report and reiterate our commitment to being a non-partisan, politically balanced news ratings organization that actively works to mitigate its own biases.

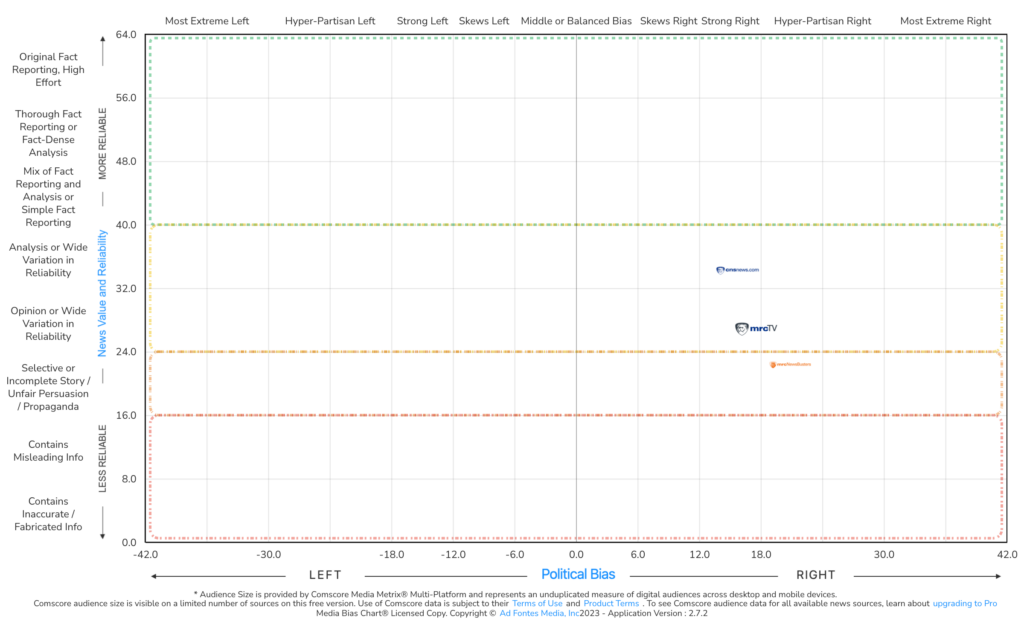

For context, MRC and its affiliate properties rate here on the Media Bias Chart:

A common thread between complaints from the left and the right is that their side’s content should be rated higher and the other side should be rated lower. We often field such complaints, which are typically accompanied by examples of offenses from the other side. Everyone brings a sample of content they think is persuasive. The problem is that everyone thinks their own sample is sufficient to make such determinations when, in reality, those samples are selected to make a point and are not representative.

Our approach, when we receive complaints, requests for comment, or published criticism from publishers, is to try to talk to the publishers directly to see if we can explain our methodology, listen to any concerns they have, correct our mistakes when they’re called to our attention, and see if we can reach an increased level of mutual understanding, even if we ultimately still disagree on things.

A couple of reporters from Media Research Center (MRC, affiliated with CNS News and NewsBusters) reached out a few weeks ago asking for comment on past political party donations by me and Brad Berens. Berens is one of our advisors and investors who has recently worked in a specific role as Head of Insights, through which he has written some research pieces about news and the ad industry. I responded to MRC’s request and suggested an in-person call. Jennifer Arvin Furlong, our Director of Communications and long-time analyst (who happens to be a distinguished Marine Corps veteran and politically conservative), also joined the call. We talked for over an hour, and though I left the conversation hoping that we had created greater mutual understanding, they still published a detailed report that dismissed our methodology as flawed, biased, and not truly committed to political balance.

To support their assertions that we are leftist and “rigged to favor left-wing media,” they rely on two main lines of evidence. The first line of evidence involves sets of statistics they calculated while the second line of evidence involves more personal assertions about our staff and our mission. This report from MRC contains numerous mischaracterizations of what we have actually said and done as an organization. Most of these are in the form of a strawman argument, where they looked at something we said or did, asserted we said or did something that sounds a lot worse but which we didn’t do, and attacked that thing. We won’t address each individual instance in this response for the sake of your attention span. But we would like to address the main contentions in the piece with which we disagree.

The first line of evidence purports to show that:

1) We rate a greater percentage of left-leaning outlets as reliable (64%) than right-leaning outlets as reliable (32%).

In their calculation, they count anything not rated exactly 0 on the bias axis as left-leaning or right-leaning, ignoring our “middle/balanced bias” numerical range of +/-6. Their calculations are incorrect. We consider sources rated 40 and higher to be reliable. By our calculation, 1,623 sources (48.5%) rated as reliable have middle/balanced bias, compared with 204 left-leaning sources (6.1%) and 19 right-leaning sources (0.6%). Our calculations confirm there are more outlets on the left rated as highly reliable than there are outlets on the right. MRC, however, misconstrued these numbers to assert that they prove we must be biased.

MRC started their analysis with a conclusion and fit their analysis to support that conclusion. Our initial analysis supports the idea that more left-leaning content is rated higher than right-leaning content. However, there is no evidence to support the assertion that the analysts or the organization are colluding to skew those ratings. One possible explanation for this disparity is that there is a strong need for more right-leaning content, which is a business opportunity. A second explanation points to a lack of diversity among journalists. A recent study showed that a majority of journalists are left-leaning, which suggests that more right-leaning journalists are needed in the profession to increase representation and diversity of thought. A third possible explanation is that the right does not have the breadth of reporting on non-political topics (e.g., science, culture, and international affairs) that the left seems to have. We hypothesize that any or all of these explanations affect overall ratings within our methodology. These are just initial musings, and exploring them would take additional ratings and research. We plan to continue our work and provide those findings to you.

2) We rate a greater percentage of right outlets low (29%) vs left outlets (2.9%).

Again, these statistics are off because they count outlets rated as “middle or balanced bias” as left-leaning or right-leaning when, in fact, most of them fall within +/-6 of 0. We consider sources rated 24 or below to be unreliable. By our calculation, 6 sources (0.18%) rated as unreliable have middle/balanced bias, compared with 58 left-leaning sources (1.73%) and 211 right-leaning sources (6.31%).

Just as with our explanation above, there is no evidence to support their assertion.
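The bucketing described under points 1 and 2 can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not our production code: the `Source` class, the function names, the "mixed" label for scores between the two thresholds, the sign convention (negative bias for left, positive for right), and the sample outlets are all hypothetical, and the sketch treats the +/-6 boundary as inclusive. The `zero_only_bucket` function reflects the counting approach attributed to MRC's report above, as we read it.

```python
from collections import Counter
from dataclasses import dataclass

# Thresholds quoted in the text above.
MIDDLE_BIAS_LIMIT = 6   # bias within +/-6 counts as middle/balanced (inclusive: assumption)
RELIABLE_MIN = 40       # reliability of 40 or higher counts as reliable
UNRELIABLE_MAX = 24     # reliability of 24 or below counts as unreliable


@dataclass(frozen=True)
class Source:
    name: str
    bias: float          # assumed convention: negative = left, positive = right
    reliability: float


def bias_bucket(bias: float) -> str:
    """Bucket a bias score using the +/-6 middle/balanced range."""
    if abs(bias) <= MIDDLE_BIAS_LIMIT:
        return "middle"
    return "left" if bias < 0 else "right"


def zero_only_bucket(bias: float) -> str:
    """Alternative bucketing that treats only an exact 0 as middle."""
    if bias == 0:
        return "middle"
    return "left" if bias < 0 else "right"


def reliability_bucket(reliability: float) -> str:
    """Bucket a reliability score; 'mixed' is a hypothetical label for the gap."""
    if reliability >= RELIABLE_MIN:
        return "reliable"
    if reliability <= UNRELIABLE_MAX:
        return "unreliable"
    return "mixed"


def tally(sources, bucket_fn=bias_bucket):
    """Count sources per (bias bucket, reliability bucket) pair."""
    return Counter(
        (bucket_fn(s.bias), reliability_bucket(s.reliability)) for s in sources
    )


# Hypothetical outlets, invented purely to exercise the functions.
sample = [
    Source("Outlet A", -2.0, 45.0),
    Source("Outlet B", -12.0, 41.0),
    Source("Outlet C", 15.0, 20.0),
    Source("Outlet D", 6.0, 30.0),
]

counts = tally(sample)
zero_counts = tally(sample, zero_only_bucket)
```

With this made-up sample, `counts` places Outlet A in the middle/reliable cell, while `zero_counts` moves it to left/reliable. That shift is how the choice of middle range changes the left and right totals.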

3) Our analysts are required by the CEO to conform to a single mindset, which results in them arriving at a single score “99% of the time.”

This is a mischaracterization of my statement. Our analysts are able to come within an acceptable numerical range 99% of the time. By our methodology, when rating content, our analysts will have a discussion and try to reach a consensus within an acceptable range (within an 8-point spread). This 8-point range is broad enough to allow for a variety of points of view while maintaining the standards of and adherence to the methodology. The analysts’ scores are then averaged together to create the composite score, which is what you see on the Media Bias Chart.

Sometimes, our analysts have such different points of view that they cannot reach a consensus and the point spread is too wide. In these challenging cases, the pieces of content are forwarded to another pod of analysts to review.
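The final check described above (an 8-point spread, then an average) can be sketched as follows. This is a minimal illustration, not our actual tooling: the function name `composite_score` and the use of `None` to signal escalation are hypothetical, and the sketch assumes a spread of exactly 8 points is still acceptable. It also leaves out the discussion step, which happens before any scores are finalized.

```python
from statistics import mean

MAX_SPREAD = 8  # acceptable point spread quoted in the text (inclusive: assumption)


def composite_score(scores):
    """Average the analysts' scores if they fall within the acceptable spread.

    Returns None when the spread is too wide, which stands in for forwarding
    the piece of content to another pod of analysts.
    """
    if not scores:
        raise ValueError("at least one analyst score is required")
    spread = max(scores) - min(scores)
    if spread > MAX_SPREAD:
        return None
    return mean(scores)


# Hypothetical scores from one pod of analysts.
within_range = composite_score([38, 42, 44])   # spread of 6, so the scores are averaged
too_wide = composite_score([30, 42, 44])       # spread of 14, so the result is None
```

The point of the sketch is that analysts are not required to land on a single number. Any set of scores inside the spread is averaged, and only wider disagreements are sent on for another review.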

Moreover, it’s important to note that in social science research and data gathering, which is where our work most closely falls, the validity or bias of a study is better determined by factors other than the results of the study. Things like the consistency of the inputs and control of variables are strong indicators of validity. In content analysis in particular, the granularity of coding and consistency of coders are strong indicators of validity. The replicability of results when different people apply the process is also a strong indicator of validity. In our case, over 65 analysts from across the political spectrum have participated in our ratings and have replicated similar results to each other. This is not a signal of conformity as MRC claims; rather, it serves to underscore the validity of our ratings.

In MRC’s first line of evidence, they attribute all unequal outcomes in our data solely to a perceived bias. Obviously, we disagree and have shown numerous mistakes and mischaracterizations in their report that result in a flawed analysis. We have highlighted the most salient errors in their calculations. The media landscape is not perfectly symmetrical. It is unrealistic to expect that it would be, for myriad reasons, and the MRC report’s conclusions overlook all of them.

Furthermore, because the results of the ratings are unequal between the two sides, they conclude the process must be biased toward one side. This line of criticism suffers from a logical fallacy about cause and effect, also known as the causal fallacy. This is like arguing that because Russia, Romania, and the United States have won the most gold medals in women’s gymnastics, the judges must be biased toward those countries. Or that because certain students score low on tests, the tests are biased against those students. Bias of the judges or a test is certainly a possibility, but there are other more likely possibilities. The results alone are not the best indicator of whether a process, methodology, study design, or test is biased. Between the miscalculations and illogical reasoning, I believe we have shown MRC’s first line of criticism to be fundamentally flawed.

The second line of criticism from MRC concerns the personal political views of the leadership and staff of Ad Fontes Media (AFM), calls our integrity into question, and dismisses our mission as smoke and mirrors.

The second line of evidence attempts to argue that:

1) Ad Fontes leadership “champions” a left-leaning point of view with the goal of telling Americans to trust “leftist media” and not to trust anyone on the right.

It’s probably fairer game to pick on my political views given I am the leader of this organization, but as I often say, I don’t think you have to be a centrist or hold any other political position to be qualified to do this work. The belief that you do is itself a form of bias. Also, I’ve been refraining from any public partisanship since 2018, but like all of you, I had public political opinions and actions before Ad Fontes.

On the other hand, picking on Brad Berens’ political views is selective and lazy, because there are plenty of other people with as much involvement and influence on our organization with left, right, and center opinions expressed on social media, who give political donations, and are politically active in other ways. We strive to be non-partisan in what we put out as an organization, but we don’t require that people associated with or employed by our company stop publicly communicating their political views. We have team members and analysts from across the political spectrum who post their political views on social media and even have political podcasts. It’s true their online activities can open us up to criticism, but the tradeoff of asking any of our employees, advisors, or investors to stop sharing their political views to prevent criticism of our work is not worth it. We support free speech. We like that our analysts are politically engaged, regardless of their politics. Not to mention we specifically want people with political views in the first place.

Additionally, as we have seen many times in the news, people who want to criticize you can and will go back to what you have posted in the past to try to support their negative assertions about you anyway. Philosophically, Brad and I both believe that a person’s personal political views don’t automatically render that person incapable of being fair to somebody with whom they disagree. It is possible for reasonable people to disagree and be fair to the people with whom they disagree. Many of us at AFM have had and continue to have strong personal and professional relationships with people on the opposite side of the political aisle. Indeed, AFM is built on the presumption that people who disagree politically can work together. Our analysts do it every day.

2) AFM analysts are nameless and faceless, which calls AFM’s credibility into question.

While I can’t speak for any other news rating organization, I can tell you our organization believes in transparency. So much so that you can easily look up our advisors, directors, and staff on the Team page of our website. Our analysts, with names, faces, and even bios, are listed there. We have staff who speak up every day on their personal social media platforms, commenting about our organization and their respective roles in it. Our analysts and staff have been guest speakers talking about our work at many different events, from meetings hosted by local Rotary clubs, to classes studying journalism, to conferences focused on digital literacy, to podcasts that are political and non-political alike. As a matter of fact, you can learn more about our external activities and speaking engagements on our blog and you can request a speaker for your event here.

3) AFM has federal government funding.

This is simply not the case. We have no funding from any government agency. We are a for-profit organization, and our revenue comes from the sale of products and services. To start and grow our operations, we have raised donation-based crowdfunding, equity-based crowdfunding, and venture capital from private investors who believe in our mission. Since 2018 we’ve raised $6 million thanks to individuals who care about our mission to improve the quality of news for everyone.

We are proud that people from different backgrounds and experiences have invested in our company. Whether the amount is large or small, the individuals who have supported us believe in our mission. Our investors include people from across the political spectrum. They are teachers, librarians, industry leaders, experts, public servants, lawyers, and every other profession you can imagine. You can find more detail about that funding on our About page.

While we have solid representation from all political perspectives in our investor, advisory, and leadership bodies, it is quite difficult to achieve perfectly equal representation in those groups at all times, given that we don’t have control over which successful individuals step up to assist us. We do, however, have control over who we hire. We actively strive to maintain a balanced representation of political viewpoints among our core team and nearly perfectly balanced left-right-center representation where it matters most, in our analyst corps.

As you can see, MRC’s second line of evidence, like its first, falls short of substantiating the claims of left-leaning favoritism made in their report. We are not federally funded, nor do we plan to be. Do we have leaders and staff and analysts with biased points of view? Of course we do. We are all human, after all. But we also believe that bias is not inherently bad. As a matter of fact, it’s pretty good for democracy. Also, our advisory board and those in leadership positions are not involved in daily operations such as ratings. Lastly, we believe in transparency and invite you to see who we are, what we do, and how we do it by checking out our website.

As of today, there have been several follow-on pieces from outlets on the right, including Breitbart, Daily Wire, The Federalist, and the Mark Levin Show. The Daily Wire reporter reached out for comment, and Jen and I had a nice conversation with them, but despite our willingness to answer all their questions, they still wrote an article with several unfair assertions and mischaracterizations. Newsmax published a similar piece after they reached out and interviewed Jen for comments.

The main complaints from the MRC report and subsequent articles from the other right-leaning outlets are similar to the complaints from outlets on the left: our side is rated too low and the other side is rated too high, and it must be because AFM is biased against our side. Any accusations claiming that we rig our ratings to be unfair to one side or another are simply untrue. Because we actively maintain a politically diverse analyst corps, use a methodology that is systematic, replicable, and valid, and constantly work in good faith to mitigate our own bias and improve our methods, we stand by our ratings.

If you’d like to keep up on all of the amazing work that we do, sign up for our free weekly email newsletter!

Jennifer Furlong, Director of Communications and Erin Fox-Ramirez, Director of Ratings and Research contributed to this response.

Vanessa Otero is a former patent attorney in the Denver, Colorado, area with a B.A. in English from UCLA and a J.D. from the University of Denver. She is the original creator of the Media Bias Chart (October 2016), and founded Ad Fontes Media in February of 2018 to fulfill the need revealed by the popularity of the chart — the need for a map to help people navigate the complex media landscape, and for comprehensive content analysis of media sources themselves. Vanessa regularly speaks on the topic of media bias and polarization to a variety of audiences.